Promoting fairness for under-represented languages in multilingual LLMs (2025-2026)

This project is funded by the University of Washington (UW) Global Innovation Fund (GIF) Research Award for a collaboration between Université Paris Cité (UPCité) and UW to promote fairness for under-represented languages in multilingual LLMs.

PIs for the University of Washington (UW): Gina-Anne Levow and Richard Wright

PI for Université Paris Cité (UPCité): Nicolas Ballier

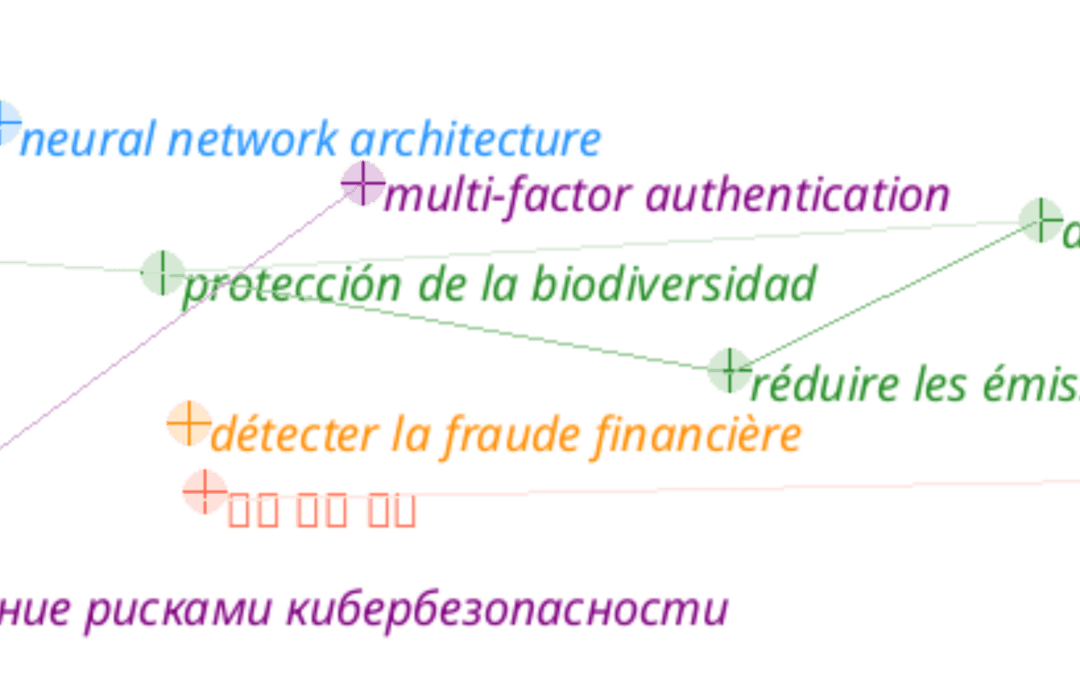

Of the roughly 7,000 languages in the world, only a handful are well represented in language technologies such as LLMs. Of those, English is vastly over-represented, and only a few dialects are represented. This sampling imbalance is especially acute for spoken language models such as Whisper (Radford et al., 2023), where English alone accounts for more than three quarters of the training data. Under-representation in models leads to poor performance, leaving recent innovations in generative AI out of reach for most of the world’s population. Our collaboration will measure and minimize training-data bias in multilingual language models such as ChatGPT (OpenAI, 2023), Whisper (Radford et al., 2023), and XLM-R (Conneau et al., 2019). We want to address how the lack of training data adversely affects the representations of lower-resource languages, and we seek to mitigate these harms through improved representations that enhance both task accuracy and model efficiency.

This first event focuses on audio large language models and highlights the role of speech tokenisers. We develop our case studies on Whisper and Wav2vec. A second event, entitled “Speech and text LLMs revisited: under the hood investigation of multilingual models”, will be held in person at UW in fall 2025 for a mixed audience of linguists, engineers and computational scientists.

FIRST EVENT: Paris

Zoom session: 26 May 2025

Zoom: https://u-paris.zoom.us/j/86356943682?pwd=llaCqKu8aOajFauHBU36X6DDMddZcb.1

Password: 542839

Room: 720 (seventh floor)

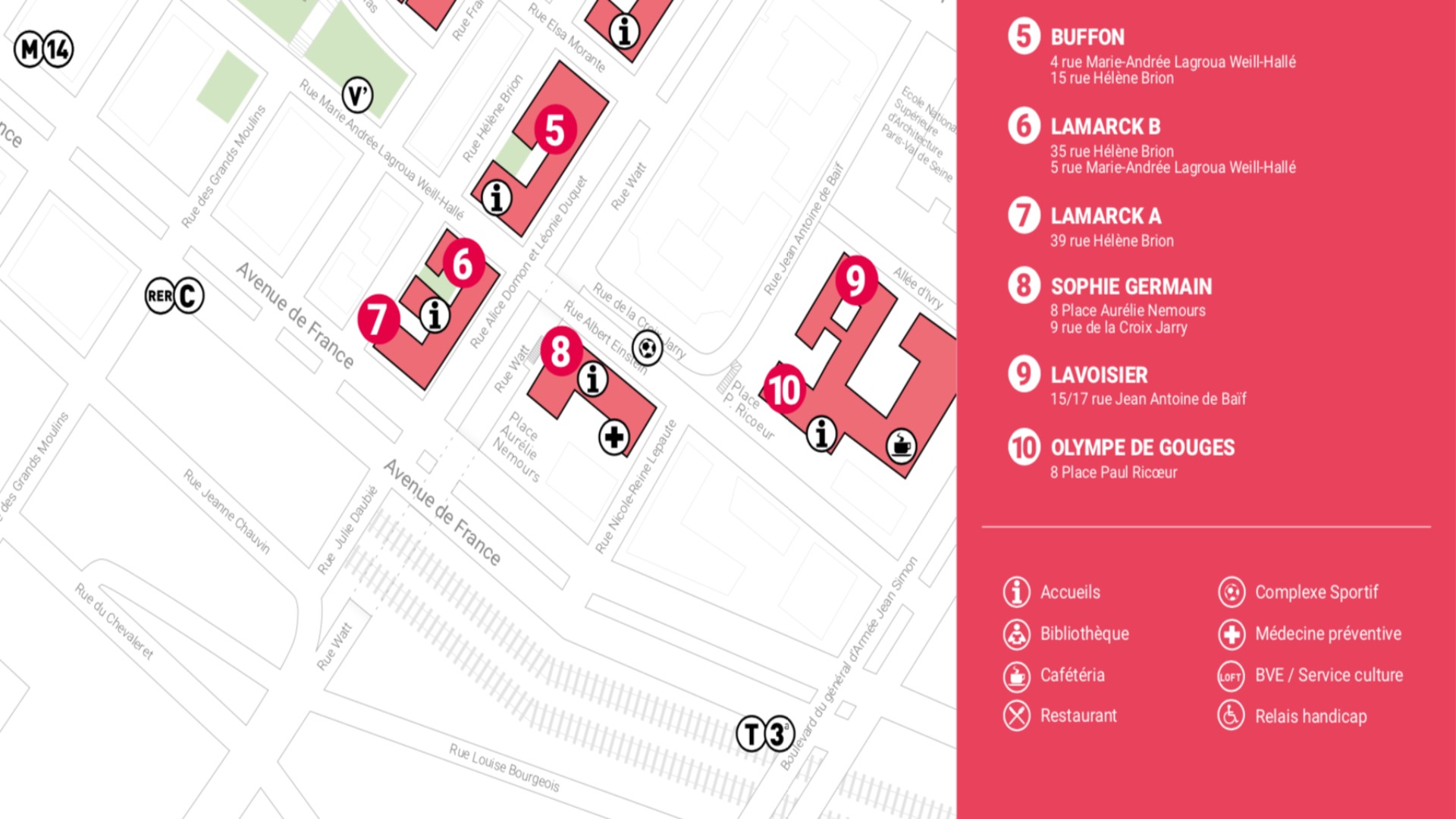

Bâtiment Olympe de Gouges

8 Place Paul Ricoeur

75013 PARIS

Access to the Olympe de Gouges building

This workshop discusses various approaches to probing audio LLMs such as Whisper (Radford et al., 2023) or Wav2vec (Baevski et al., 2020).

FINAL PROGRAMME

(All times are Paris time, CEST: 16h in Paris is 7h in Seattle.)

16h Nicolas Ballier (UPCité), Gina Levow & Richard Wright (UW) : introduction

We briefly present current research in the making on speech language models.

PART 1: METHODS

16h05 Guillaume Wisniewski (UPCité) Measuring distance with Wav2vec & Whisper

16h20 Taylor Arnold (Richmond) Analyzing Whisper encoder outputs with UNICODE

16h30 Sara Ng (Western Washington University) Realigning Whisper output with human transcriptions

16h40 James Tanner (Glasgow) Analyzing Stops / VOT with Wav2vec

17h00 Discussion (coffee)

PART 2: CASE STUDIES

17h30 Behnoosh Namdarzadeh (UPCité) Whisper transcriptions for lesser-resourced languages: the case of Persian

17h45 Katelyn Mei (UW) & Anna Choi (Cornell) Careless Whisper

18h15 Gita Dhungana (UW) Nepali

18h30 Discussion (coffee)

19h00 Siyu Liang (UW) Analyzing Whisper decoder outputs across diverse language resource levels

19h20 Final discussion

19h50 Closing remarks

20h End

SOME RESOURCES:

Ballier, N., Burin, L., Namdarzadeh, B., Ng, S., Wright, R., & Yunès, J. B. (2024, October). Probing Whisper predictions for French, English and Persian transcriptions. In 7th International Conference on Natural Language and Speech Processing (Vol. 7, pp. 129-138). <hal-04547597>

Ballier, N., Arnold, T., Méli, A., Fullerton, T., & Yunès, J. B. (2024). Whisper for L2 speech scoring. International Journal of Speech Technology, 27, 923-934.

Koenecke, A., Choi, A. S. G., Mei, K. X., Schellmann, H., & Sloane, M. (2024). Careless whisper: Speech-to-text hallucination harms. In Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency, 1672-1681.

Namdarzadeh, B., & Ballier, N. (2024, draft). Audio LLM subtokens as encapsulated “knowledge”: the case of Persian subtoken graphemic representations in Whisper. In Grapholinguistics in the 21st century—From graphemes to knowledge.

Some links to scripts:

The C++ customised version of Whisper we used for encoder outputs is here: https://github.com/jbyunes/whisper.cpp

The alignment script between Whisper encoder predictions and final transcripts is available at https://github.com/statsmaths/paper-replication

The multilingual and English-only Whisper subtoken dictionaries are available as .json files here: https://github.com/nballier/Whisper
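For readers who want a first look at a subtoken dictionary once it is downloaded, the following is a minimal Python sketch. It assumes, without having checked the repository, that each .json file is a single JSON object mapping subtoken strings to integer IDs. The function names and the sample entries are hypothetical and serve only as illustration.

```python
import json
from collections import Counter
from pathlib import Path


def load_vocab(path):
    """Load a subtoken dictionary.

    Assumption: the file is a JSON object mapping subtoken -> integer id.
    """
    with Path(path).open(encoding="utf-8") as handle:
        return json.load(handle)


def length_histogram(vocab):
    """Count how many subtokens have each length (in characters)."""
    return Counter(len(token) for token in vocab)


# Tiny in-memory stand-in for a real dictionary file (hypothetical entries).
sample_vocab = {"the": 0, "ing": 1, "Ġhello": 2, "a": 3}
histogram = length_histogram(sample_vocab)

for length, count in sorted(histogram.items()):
    print(f"{count} subtoken(s) of length {length}")
```

To run the same summary on a real file, pass the result of `load_vocab` (with the path of a downloaded dictionary) to `length_histogram`. If the files turn out to use a different layout, only `load_vocab` would need to change.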